capsul.study_config module¶

Configuration of Capsul and external software operated through capsul.

capsul.study_config.config_utils submodule¶

Utility functions for configuration

Functions¶

environment()¶

capsul.study_config.memory submodule¶

Memory caching. Probably mostly obsolete, this code is not much used now.

Classes¶

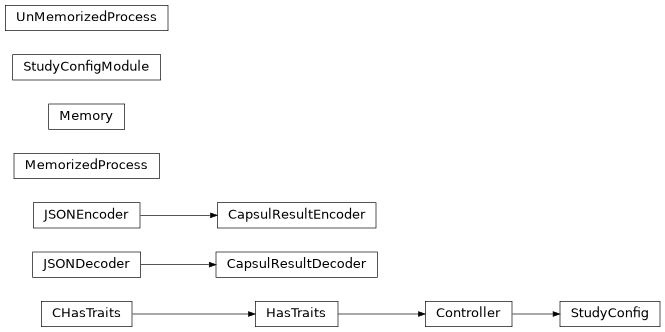

UnMemorizedProcess¶

MemorizedProcess¶

CapsulResultEncoder¶

Memory¶

Functions¶

get_process_signature()¶

has_attribute()¶

file_fingerprint()¶

- class capsul.study_config.memory.CapsulResultDecoder(*args, **kargs)[source]¶

Deal with ProcessResult in json.

object_hook, if specified, will be called with the result of every JSON object decoded and its return value will be used in place of the givendict. This can be used to provide custom deserializations (e.g. to support JSON-RPC class hinting).object_pairs_hook, if specified will be called with the result of every JSON object decoded with an ordered list of pairs. The return value ofobject_pairs_hookwill be used instead of thedict. This feature can be used to implement custom decoders. Ifobject_hookis also defined, theobject_pairs_hooktakes priority.parse_float, if specified, will be called with the string of every JSON float to be decoded. By default this is equivalent to float(num_str). This can be used to use another datatype or parser for JSON floats (e.g. decimal.Decimal).parse_int, if specified, will be called with the string of every JSON int to be decoded. By default this is equivalent to int(num_str). This can be used to use another datatype or parser for JSON integers (e.g. float).parse_constant, if specified, will be called with one of the following strings: -Infinity, Infinity, NaN. This can be used to raise an exception if invalid JSON numbers are encountered.If

strictis false (true is the default), then control characters will be allowed inside strings. Control characters in this context are those with character codes in the 0-31 range, including'\t'(tab),'\n','\r'and'\0'.

- class capsul.study_config.memory.CapsulResultEncoder(*, skipkeys=False, ensure_ascii=True, check_circular=True, allow_nan=True, sort_keys=False, indent=None, separators=None, default=None)[source]¶

Deal with ProcessResult in json.

Constructor for JSONEncoder, with sensible defaults.

If skipkeys is false, then it is a TypeError to attempt encoding of keys that are not str, int, float or None. If skipkeys is True, such items are simply skipped.

If ensure_ascii is true, the output is guaranteed to be str objects with all incoming non-ASCII characters escaped. If ensure_ascii is false, the output can contain non-ASCII characters.

If check_circular is true, then lists, dicts, and custom encoded objects will be checked for circular references during encoding to prevent an infinite recursion (which would cause an RecursionError). Otherwise, no such check takes place.

If allow_nan is true, then NaN, Infinity, and -Infinity will be encoded as such. This behavior is not JSON specification compliant, but is consistent with most JavaScript based encoders and decoders. Otherwise, it will be a ValueError to encode such floats.

If sort_keys is true, then the output of dictionaries will be sorted by key; this is useful for regression tests to ensure that JSON serializations can be compared on a day-to-day basis.

If indent is a non-negative integer, then JSON array elements and object members will be pretty-printed with that indent level. An indent level of 0 will only insert newlines. None is the most compact representation.

If specified, separators should be an (item_separator, key_separator) tuple. The default is (’, ‘, ‘: ‘) if indent is

Noneand (‘,’, ‘: ‘) otherwise. To get the most compact JSON representation, you should specify (‘,’, ‘:’) to eliminate whitespace.If specified, default is a function that gets called for objects that can’t otherwise be serialized. It should return a JSON encodable version of the object or raise a

TypeError.- default(obj)[source]¶

Implement this method in a subclass such that it returns a serializable object for

o, or calls the base implementation (to raise aTypeError).For example, to support arbitrary iterators, you could implement default like this:

def default(self, o): try: iterable = iter(o) except TypeError: pass else: return list(iterable) # Let the base class default method raise the TypeError return JSONEncoder.default(self, o)

- class capsul.study_config.memory.MemorizedProcess(process, cachedir, timestamp=None, verbose=1)[source]¶

Callable object decorating a capsul process for caching its return values each time it is called.

All values are cached on the filesystem, in a deep directory structure. Methods are provided to inspect the cache or clean it.

Initialize the MemorizedProcess class.

- Parameters:

process (capsul process) – the process instance to wrap.

cachedir (string) – the directory in which the computation will be stored

timestamp (float (optional)) – The reference time from which times in tracing messages are reported.

callback (callable (optional)) – an optional callable called each time after the function is called.

verbose (int) – if different from zero, print console messages.

- class capsul.study_config.memory.Memory(cachedir)[source]¶

Memory context to provide caching for processes.

- `cachedir`

the location for the caching. If None is given, no caching is done.

- Type:

string

Initialize the Memory class.

- Parameters:

base_dir (string) – the directory name of the location for the caching.

- cache(process, verbose=1)[source]¶

Create a proxy of the given process in order to only execute the process for input parameters not cached on disk.

- Parameters:

process (capsul process) – the capsul Process to be wrapped and cached.

verbose (int) – if different from zero, print console messages.

- Returns:

proxy_process – the returned object is a MemorizedProcess object, that behaves as a process object, but offers extra methods for cache lookup and management.

- Return type:

MemorizedProcess object

Examples

Create a temporary memory folder

>>> from tempfile import mkdtemp >>> mem = Memory(mkdtemp())

Here we create a callable that can be used to apply an fsl.Merge interface to files

>>> from capsul.process import get_process_instance >>> nipype_fsl_merge = get_process_instance( ... "nipype.interfaces.fsl.Merge") >>> fsl_merge = mem.cache(nipype_fsl_merge)

Now we apply it to a list of files. We need to specify the list of input files and the dimension along which the files should be merged.

>>> results = fsl_merge(in_files=['a.nii', 'b.nii'], dimension='t')

We can retrieve the resulting file from the outputs:

>>> results.outputs._merged_file

- class capsul.study_config.memory.UnMemorizedProcess(process, verbose=1)[source]¶

This class replaces MemorizedProcess when there is no cache. It provides an identical API but does not write anything on disk.

Initialize the UnMemorizedProcess class.

- Parameters:

process (capsul process) – the process instance to wrap.

verbose (int) – if different from zero, print console messages.

- capsul.study_config.memory.file_fingerprint(a_file)[source]¶

Computes the file fingerprint.

Do not consider the file content, just the fingerprint (ie. the mtime, the size and the file location).

- Parameters:

a_file (string) – the file to process.

- Returns:

fingerprint – the file location, mtime and size.

- Return type:

- capsul.study_config.memory.get_process_signature(process, input_parameters)[source]¶

Generate the process signature.

- capsul.study_config.memory.has_attribute(trait, attribute_name, attribute_value=None, recursive=True)[source]¶

Checks if a given trait has an attribute and optionally if it is set to particular value.

- Parameters:

- Returns:

res – True if input given trait has an attribute and optionally if it is set to a particular value.

- Return type:

capsul.study_config.process_instance submodule¶

Process instance factory

Functions¶

is_process()¶

is_pipeline_node()¶

get_process_instance()¶

get_node_class()¶

get_node_instance()¶

- capsul.study_config.process_instance.get_node_class(node_type)[source]¶

Get a custom node class from module + class string. The class name is optional if the module contains only one node class. It is OK to pass a Node subclass or a Node instance also.

- capsul.study_config.process_instance.get_node_instance(node_type, pipeline, conf_dict=None, name=None, **kwargs)[source]¶

Get a custom node instance from a module + class name (see

get_node_class()) and a configuration dict or Controller. The configuration contains parameters needed to instantiate the node type. Each node class may specify its parameters via its class method configure_node.- Parameters:

node_type (str or Node subclass or Node instance) – node type to be built. Either a class (Node subclass) or a Node instance (the node will be re-instantiated), or a string describing a module and class.

pipeline (Pipeline) – pipeline in which the node will be inserted.

conf_dict (dict or Controller) – configuration dict or Controller defining parameters needed to build the node. The controller should be obtained using the node class’s configure_node() static method, then filled with the desired values. If not given the node is supposed to be built with no parameters, which will not work for every node type.

kwargs – default values of the node instance parameters.

- capsul.study_config.process_instance.get_process_instance(process_or_id, study_config=None, **kwargs)[source]¶

Return a Process instance given an identifier.

Note that it is convenient to create a process from a StudyConfig instance: StudyConfig.get_process_instance()

The identifier is either:

a derived Process class.

a derived Process class instance.

a Nipype Interface instance.

a Nipype Interface class.

a string description of the class <module>.<class>.

a string description of a function to warp <module>.<function>.

a string description of a module containing a single process <module>

a string description of a pipeline <module>.<fname>.xml.

an XML filename for a pipeline.

a JSON filename for a pipeline.

a Python (.py) filename with process name in it: /path/process_source.py#ProcessName.

a Python (.py) filename for a file containing a single process.

Default values of the process instance are passed as additional parameters.

- Parameters:

process_or_id (instance or class description (mandatory)) – a process/nipype interface instance/class or a string description.

study_config (StudyConfig instance (optional)) – A Process instance belongs to a StudyConfig framework. If not specified the study_config can be set afterwards.

kwargs – default values of the process instance parameters.

- Returns:

result – an initialized process instance.

- Return type:

capsul.study_config.run submodule¶

Process and pipeline execution management

Functions¶

run_process()¶

- capsul.study_config.run.run_process(output_dir, process_instance, generate_logging=False, verbose=0, configuration_dict=None, cachedir=None, **kwargs)[source]¶

Execute a capsul process in a specific directory.

- Parameters:

output_dir (str (mandatory)) – the folder where the process will write results.

process_instance (Process (mandatory)) – the capsul process we want to execute.

cachedir (str (optional, default None)) – save in the cache the current process execution. If None, no caching is done.

generate_logging (bool (optional, default False)) – if True save the log stored in the process after its execution.

verbose (int) – if different from zero, print console messages.

configuration_dict (dict (optional)) – configuration dictionary

- Returns:

returncode (ProcessResult) – contains all execution information.

output_log_file (str) – the path to the process execution log file.

capsul.study_config.study_config submodule¶

Main StudyConfig class for configuration of Capsul software, directories etc.

Classes¶

StudyConfig¶

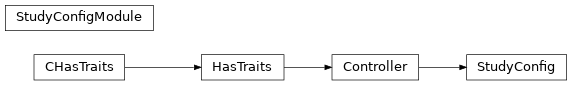

StudyConfigModule¶

Functions¶

default_study_config()¶

- class capsul.study_config.study_config.StudyConfig(study_name=None, init_config=None, modules=None, engine=None, **override_config)[source]¶

Class to store the study parameters and processing options.

StudyConfig is deprecated and will probably be removed in Capsul 3. Please use

CapsulEngineinstead and its construction function,capsul_engine()when possible.This in turn is used to evaluate a Process instance or a Pipeline.

StudyConfig has modules (see BrainVISAConfig, AFNIConfig, FSLConfig, MatlabConfig, ANTSConfig, SmartCachingConfig, SomaWorkflowConfig, SPMConfig, FOMConfig, MRTRIXConfig). Modules are initialized in the constructor, so their list has to be setup before instantiating StudyConfig. A default modules list is used when no modules are specified: StudyConfig.default_modules

StudyConfig configuration is loaded from a global file and then from a study specific file (based on study_name parameter). The global configuration file name is either in os.environ[‘CAPSUL_CONFIG’] or in “~/.config/capsul/config.json”. The study specific configuration file name is either defined in the global configuration or in “~/.config/capsul/<study_name>/config.json”.

from capsul.api import StudyConfig study_config = StudyConfig(modules=['SPMConfig', 'FomConfig']) # or: study_config = StudyConfig(modules=StudyConfig.default_modules + ['FomConfig'])

- create_output_directories¶

Create parent directories of all output File or Directory before running a process

- Type:

bool (default True)

- process_output_directory¶

Create a process specific output_directory by appending a subdirectory to output_directory. This subdirectory is named ‘<count>-<name>’ where <count> if self.process_counter and <name> is the name of the process.

- Type:

bool (default False)

Initialize the StudyConfig class

- Parameters:

study_name (Name of the study to configure. This name is used to) – identify specific configuration for a study.

init_config (if not None, must contain a dictionary that will be used) – to configure this StudyConfig (instead of reading configuration from configuration files).

modules (list of string (default self.default_modules).) – the names of configuration module classes that will be included in this study configuration.

engine (CapsulEngine) – this parameter is temporary, it just helps to handle the transition to

capsul.engine.CapsulEngine. Don’t use it in client code.override_config (dictionary) – The content of these keyword parameters will be set on the configuration after it has been initialized from configuration files (or from init_config).

- add_trait(name, *trait)[source]¶

Add a new trait.

- Parameters:

name (str (mandatory)) – the trait name.

trait (traits.api (mandatory)) – a valid trait.

- get_configuration_dict()[source]¶

Returns a json compatible dictionary containing current configuration.

- get_iteration_pipeline(pipeline_name, node_name, process_or_id, iterative_plugs=None, do_not_export=None, make_optional=None, **kwargs)[source]¶

Create a pipeline with an iteration node iterating the given process.

- Parameters:

pipeline_name (str) – pipeline name

node_name (str) – iteration node name in the pipeline

process_or_id (process description) – as in

get_process_instance()iterative_plugs (list (optional)) – passed to

Pipeline.add_iterative_process()do_not_export (list) – passed to

Pipeline.add_iterative_process()make_optional (list) – passed to

Pipeline.add_iterative_process()

- Returns:

pipeline

- Return type:

Pipelineinstance

- get_process_instance(process_or_id, **kwargs)[source]¶

Return a Process instance given an identifier.

The identifier is either:

a derived Process class.

a derived Process class instance.

a Nipype Interface instance.

a Nipype Interface class.

a string description of the class <module>.<class>.

a string description of a function to warp <module>.<function>.

a string description of a pipeline <module>.<fname>.xml.

an XML filename for a pipeline

Default values of the process instance are passed as additional parameters.

- Parameters:

process_or_id (instance or class description (mandatory)) – a process/nipype interface instance/class or a string description.

kwargs – default values of the process instance parameters.

- Returns:

result – an initialized process instance.

- Return type:

- get_trait(trait_name)[source]¶

Method to access the ‘trait_name’ study configuration element.

Notes

If the ‘trait_name’ element is not found, return None

- Parameters:

trait_name (str (mandatory)) – the trait name we want to access

- Returns:

trait – the trait we want to access

- Return type:

trait

- get_trait_value(trait_name)[source]¶

Method to access the value of the ‘trait_name’ study configuration element.

- initialize_modules()[source]¶

Modules initialization, calls initialize_module on each config module. This is not done during module instantiation to allow interactions between modules (e.g. Matlab configuration can influence Nipype configuration). Modules dependencies are taken into account in initialization.

- load_module(config_module_name, config)[source]¶

Load an optional StudyConfig module.

- Parameters:

config_module_name (Name of the module to load (e.g. "FSLConfig").)

config (dictionary containing the configuration of the study.)

- read_configuration()[source]¶

Find the configuration for the current study (whose name is defined in self study_name) and returns a dictionary that is a merge between global options and study specific options.

Global option are taken from environment variable CAPSUL_CONFIG if it is defined, otherwise from “~/.config/capsul/config.json” if it exists.

The configuration for a study can be defined the global configuration if it contains a dictionary in it “studies_config” option and if there is a key corresponding to self.study_name in this dictionary. If the corresponding value is a string, it must be a valid json configuration file name (either absolute or relative to the global configuration file). Otherwise, the corresponding value must be a dictionary containing study specific configuration values. If no study configuration is found from global configuration, then a file named “~/.config/capsul/%s/config.json” (where %s is self.study_name) is used if it exists.

- run(process_or_pipeline, output_directory=None, execute_qc_nodes=True, verbose=0, configuration_dict=None, **kwargs)[source]¶

- Method to execute a process or a pipeline in a study configuration

environment.

Depending on the studies_config settings, it may be a sequential run, or a parallel run, which can involve remote execution (through soma- workflow).

Only pipeline nodes can be filtered on the ‘execute_qc_nodes’ attribute.

A valid output directory is expected to execute the process or the pepeline without soma-workflow.

- Parameters:

process_or_pipeline (Process or Pipeline instance (mandatory)) – the process or pipeline we want to execute

output_directory (Directory name (optional)) – the output directory to use for process execution. This replaces self.output_directory but left it unchanged.

execute_qc_nodes (bool (optional, default False)) – if True execute process nodes that are tagged as qualtity control process nodes.

verbose (int) – if different from zero, print console messages.

configuration_dict (dict (optional)) – configuration dictionary

- save_configuration(file)[source]¶

Save study configuration as json file.

- Parameters:

file (file or str (mandatory)) – either a writable opened file or the path to the output json file.

- set_study_configuration(new_config)[source]¶

Method to set the new configuration of the study.

If a study configuration element can’t be updated properly, send an error message to the logger.

- Parameters:

new_config (ordered dict (mandatory)) – the structure that contain the default study configuration: see the class attributes to build this structure.

- class capsul.study_config.study_config.StudyConfigModule(study_config, configuration)[source]¶

StudyConfigmodule base class (abstract)- initialize_module()[source]¶

Method called to initialize selected study configuration modules on startup. This method does nothing but can be overridden by modules.

- property name¶

The name of a module that can be used in configuration to select modules to load.

capsul.study_config.config_modules submodule¶

StudyConfig configuration modules implementations

capsul.study_config.config_modules.attributes_config submodule¶

Attributes completion config module

Classes¶

AttributesConfig¶

- class capsul.study_config.config_modules.attributes_config.AttributesConfig(study_config, configuration)[source]¶

Attributes-based completion configuration module for StudyConfig

This module adds the following options (traits) in the

StudyConfigobject:- attributes_schema_paths: list of str (filenames)

attributes shchema module name

- attributes_schemas: dict(str, str)

attributes shchemas names

- process_completion: str (default:’builtin’)

process completion model name

- path_completion: str

path completion model name

capsul.study_config.config_modules.brainvisa_config submodule¶

Configuration module which links with Axon

Classes¶

BrainVISAConfig¶

- class capsul.study_config.config_modules.brainvisa_config.BrainVISAConfig(study_config, configuration)[source]¶

Configuration module allowing to use BrainVISA / Axon shared data in Capsul processes.

This module adds the following options (traits) in the

StudyConfigobject:- shared_directory: str (filename)

Study shared directory

capsul.study_config.config_modules.fom_config submodule¶

Config module for File Organization models (FOMs)

Classes¶

FomConfig¶

- class capsul.study_config.config_modules.fom_config.FomConfig(study_config, configuration)[source]¶

FOM (File Organization Model) configuration module for StudyConfig

Note

FomConfigneedsBrainVISAConfigto be part ofStudyConfigmodules.This module adds the following options (traits) in the

StudyConfigobject:- input_fom: str

input FOM

- output_fom: str

output FOM

- shared_fom: str

shared data FOM

- volumes_format: str

Format used for volumes

- meshes_format: str

Format used for meshes

- auto_fom: bool (default: True)

Look in all FOMs when a process is not found. Note that auto_fom looks for the first FOM matching the process to get completion for, and does not handle ambiguities. Moreover it brings an overhead (typically 6-7 seconds) the first time it is used since it has to parse all available FOMs.

- fom_path: list of directories

list of additional directories where to look for FOMs (in addition to the standard share/foms)

- use_fom: bool

Use File Organization Models for file parameters completion’

Methods:

capsul.study_config.config_modules.freesurfer_config submodule¶

FreeSurfer configuration module

Classes¶

FreeSurferConfig¶

- class capsul.study_config.config_modules.freesurfer_config.FreeSurferConfig(study_config, configuration)[source]¶

Class to set up freesurfer configuration.

Parse the ‘SetUpFreeSurfer.sh’ file and update dynamically the system environment.

Initialize the FreeSurferConfig class.

- Parameters:

study_config (StudyConfig object) – the study configuration we want to update in order to deal with freesurfer functions.

capsul.study_config.config_modules.fsl_config submodule¶

FSL configuration module

Classes¶

FSLConfig¶

capsul.study_config.config_modules.matlab_config submodule¶

Matlab configuration module

Classes¶

MatlabConfig¶

capsul.study_config.config_modules.nipype_config submodule¶

NiPype configuration module

Classes¶

NipypeConfig¶

- class capsul.study_config.config_modules.nipype_config.NipypeConfig(study_config, configuration)[source]¶

Nipype configuration.

In order to use nipype spm, fsl and freesurfer interfaces, we need to configure the nipype module.

Initialize the NipypeConfig class.

capsul.study_config.config_modules.smartcaching_config submodule¶

Process execution cache configuration module

Classes¶

SmartCachingConfig¶

capsul.study_config.config_modules.somaworkflow_config submodule¶

Soma-Workflow configuration module

Classes¶

ResourceController¶

SomaWorkflowConfig¶

- class capsul.study_config.config_modules.somaworkflow_config.ResourceController[source]¶

Configuration options for one Soma-Workflow computing resource

- path_translations¶

Soma-workflow paths translations mapping:

{local_path: (identifier, uuid)}

Initilaize the Controller class.

During the class initialization create a class attribute ‘_user_traits’ that contains all the class traits and instance traits defined by user (i.e. the traits that are not automatically defined by HasTraits or Controller). We can access this class parameter with the ‘user_traits’ method.

If user trait parameters are defined directly on derived class, this procedure call the ‘add_trait’ method in order to not share user traits between instances.

- class capsul.study_config.config_modules.somaworkflow_config.SomaWorkflowConfig(study_config, configuration)[source]¶

Configuration module for Soma-Workflow

Stores configuration options which are not part of Soma-Workflow own configuration, and used to run workflows from CAPSUL pipelines.

The configuration module may also store connected Soma-Workflow WorkflowController objects to allow monitoring and submitting workflows.

- somaworkflow_computing_resource¶

Soma-workflow computing resource to be used to run processing

- Type:

- somaworkflow_config_file¶

Soma-Workflow configuration file. Default:

$HOME/.soma_workflow.cfg- Type:

filename

- somaworkflow_keep_failed_workflows¶

Keep failed workflows after pipeline execution through StudyConfig

- Type:

- somaworkflow_keep_succeeded_workflows¶

Keep succeeded workflows after pipeline execution through StudyConfig

- Type:

- somaworkflow_computing_resources_config¶

Computing resource config dict, keys are resource ids. Values are

ResourceControllerinstances- Type:

- connect_resource(resource_id=None, force_reconnect=False)[source]¶

Connect a soma-workflow computing resource.

Sets the current resource to the given resource_id (transformed by get_resource_id() if None or “localhost” is given, for instance).

- Parameters:

resource_id (str (optional)) – resource name, may be None or “localhost”. If None, the current one (study_config.somaworkflow_computing_resource) is used, or the localhost if none is configured.

force_reconnect (bool (optional)) – if True, if an existing workflow controller is already connected, it will be disconnected (deleted) and a new one will be connected. If False, an existing controller will be reused without reconnection.

- Return type:

WorkflowController object

- disconnect_resource(resource_id=None)[source]¶

Disconnect a connected WorkflowController and removes it from the internal list

- get_resource_id(resource_id=None, set_it=False)[source]¶

Get a computing resource name according to the (optional) input resource_id and Soma-Workflow configuration.

For instance,

Noneor"localhost"will be transformed to the local host id.

- get_workflow_controller(resource_id=None)[source]¶

Get a connected WorkflowController for the given resource

capsul.study_config.config_modules.spm_config submodule¶

SPM configuration module

Classes¶

SPMConfig¶

- class capsul.study_config.config_modules.spm_config.SPMConfig(study_config, configuration)[source]¶

SPM configuration.

- There is two ways to configure SPM:

the first one requires to configure matlab and then to set the spm directory.

the second one is based on a standalone version of spm and requires to set the spm executable directory.